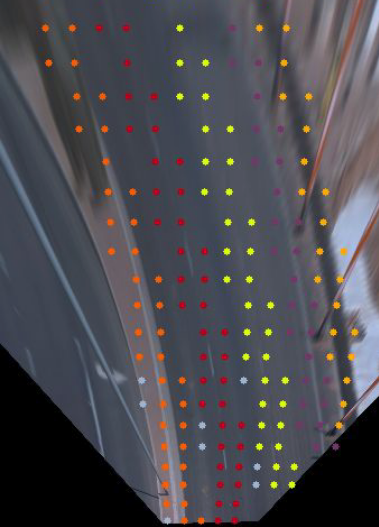

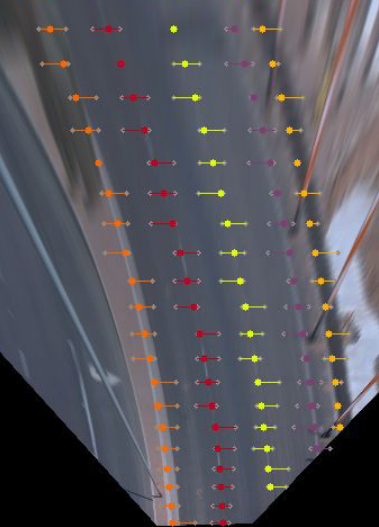

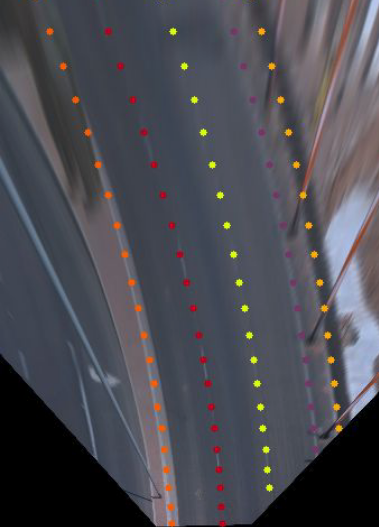

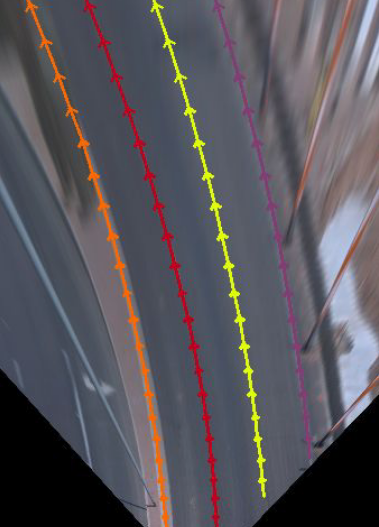

Figure: Overview of the GLane3D model architecture.

Accurate and efficient lane detection in 3D space is essential for autonomous driving systems, and robust generalization is the foremost requirement for 3D lane detection algorithms. Because lane structures vary extensively around the world, high generalization capacity is particularly hard to achieve: an algorithm must accurately identify a wide variety of lane patterns. Traditional top-down approaches rely heavily on learning lane characteristics from training datasets and often struggle with lanes that exhibit previously unseen attributes. To address this generalization limitation, we propose a method that detects lane keypoints and then predicts sequential connections between them to construct complete 3D lanes. Because each keypoint is essential for maintaining lane continuity, we predict multiple proposals per keypoint by allowing adjacent grid cells to predict the same keypoint through an offset mechanism. PointNMS then eliminates overlapping proposal keypoints, which reduces redundancy in the estimated BEV graph and minimizes the computational overhead of connection estimation. Our model surpasses previous state-of-the-art methods on both the Apollo and OpenLane datasets, achieving higher F1 scores, and it generalizes better than prior approaches when models trained on OpenLane are evaluated on the Apollo dataset.
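The two steps named in the abstract, suppressing overlapping keypoint proposals and then linking the surviving keypoints into lanes, can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes PointNMS behaves like a greedy, distance-based non-maximum suppression over BEV points, and that the predicted connections are available as a dense probability matrix that can be decoded by greedily following the strongest outgoing edge. The function names `point_nms` and `decode_lanes` and the parameters `radius` and `threshold` are hypothetical.

```python
import numpy as np


def point_nms(points, scores, radius):
    """Greedy distance-based suppression of duplicate keypoint proposals.

    points: (N, 2) BEV (x, y) positions of the proposals.
    scores: (N,) proposal confidences.
    radius: hypothetical suppression distance; a proposal closer than this
            to an already kept, higher-scoring proposal is discarded.
    Returns the indices of the kept proposals, highest score first.
    """
    points = np.asarray(points, dtype=float)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="stable")  # descending confidence
    suppressed = np.zeros(len(points), dtype=bool)
    keep = []
    for i in order:
        if suppressed[i]:
            continue
        keep.append(int(i))
        # Mark every proposal within `radius` of the kept one as redundant.
        dist = np.linalg.norm(points - points[i], axis=1)
        suppressed |= dist < radius
    return keep


def decode_lanes(adjacency, threshold=0.5):
    """Turn a keypoint adjacency matrix into ordered keypoint chains.

    adjacency[i, j] is assumed to be the predicted probability that
    keypoint j directly follows keypoint i along a lane.
    """
    adjacency = np.asarray(adjacency, dtype=float)
    edges = adjacency >= threshold
    has_incoming = edges.any(axis=0)
    has_outgoing = edges.any(axis=1)
    # A lane starts at a keypoint with a successor but no predecessor.
    starts = [i for i in range(len(adjacency))
              if has_outgoing[i] and not has_incoming[i]]
    lanes = []
    for start in starts:
        lane, visited, node = [start], {start}, start
        while True:
            candidates = [int(j) for j in np.where(edges[node])[0]
                          if int(j) not in visited]
            if not candidates:
                break
            # Follow the most confident outgoing connection.
            node = max(candidates, key=lambda j: adjacency[node, j])
            lane.append(node)
            visited.add(node)
        lanes.append(lane)
    return lanes


# Five proposals, two of which duplicate a stronger neighbour.
proposals = [[0.0, 0.0], [0.1, 0.0], [0.0, 5.0], [0.05, 5.1], [0.0, 10.0]]
confidences = [0.9, 0.6, 0.8, 0.7, 0.95]
kept = point_nms(proposals, confidences, radius=0.5)

# Connections among three keypoints forming a single chain 0 -> 1 -> 2.
connections = [[0.0, 0.9, 0.1],
               [0.0, 0.0, 0.8],
               [0.0, 0.0, 0.0]]
lanes = decode_lanes(connections)
```

Running suppression before connection estimation keeps the adjacency matrix small, since its size grows quadratically with the number of keypoints that survive.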

𝑀seg. These proposals are sampled within a lateral distance dₓ from the target lanes, increasing robustness to ensure that no keypoints are missed due to sparse or noisy predictions.𝑨, encoding which keypoints are connected in a sequential manner to represent continuous lane segments.| Method | Backbone | F1-Score ↑ | X-error near (m) ↓ | X-error far (m) ↓ | Z-error near (m) ↓ | Z-error far (m) ↓ |

|---|---|---|---|---|---|---|

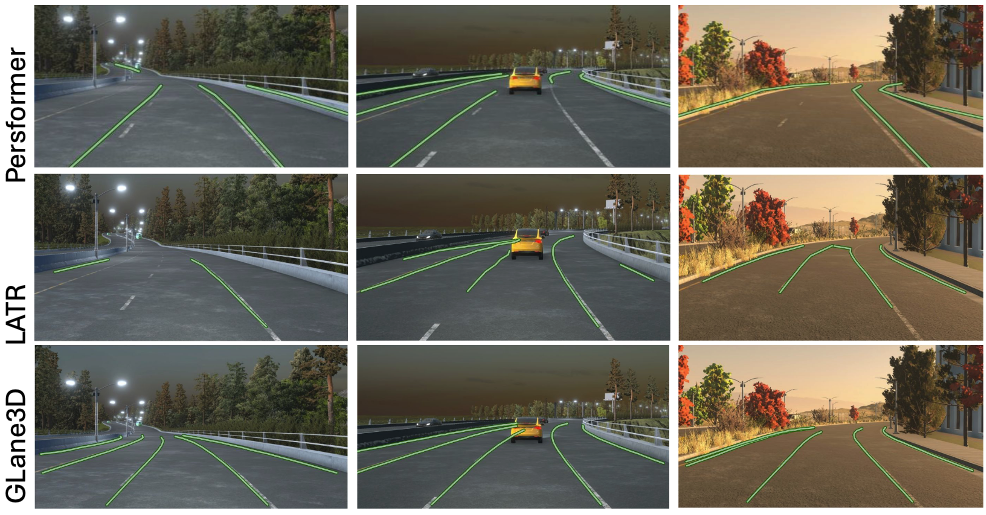

| PersFormer | EffNet-B7 | 50.5 | 0.485 | 0.553 | 0.364 | 0.431 |

| BEV-LaneDet | ResNet-34 | 58.4 | 0.309 | 0.659 | 0.244 | 0.631 |

| Anchor3DLane | EffNet-B3 | 53.7 | 0.276 | 0.311 | 0.107 | 0.138 |

| LATR | ResNet-50 | 61.9 | 0.219 | 0.259 | 0.075 | 0.104 |

| LaneCPP | EffNet-B7 | 60.3 | 0.264 | 0.310 | 0.077 | 0.117 |

| PVALane | ResNet-50 | 62.7 | 0.232 | 0.259 | 0.092 | 0.118 |

| PVALane | Swin-B | 63.4 | 0.226 | 0.257 | 0.093 | 0.119 |

| GLane3D-Lite (Ours) | ResNet-18 | 61.5 | 0.221 | 0.252 | 0.073 | 0.101 |

| GLane3D-Base (Ours) | ResNet-50 | 63.9 | 0.193 | 0.234 | 0.065 | 0.090 |

| GLane3D-Large (Ours) | Swin-B | 66.0 | 0.170 | 0.203 | 0.063 | 0.087 |

| Method | Backbone | F1-Score ↑ | X-error near (m) ↓ | X-error far (m) ↓ | Z-error near (m) ↓ | Z-error far (m) ↓ |

|---|---|---|---|---|---|---|

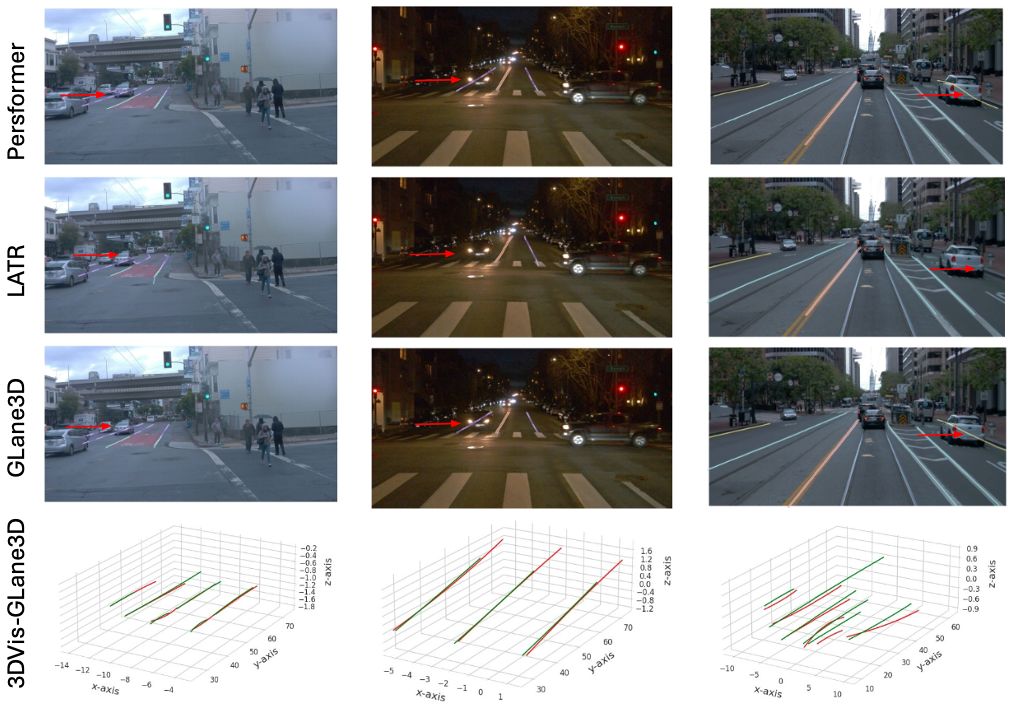

| PersFormer | EffNet-B7 | 36.5 | 0.343 | 0.263 | 0.161 | 0.115 |

| Anchor3DLane | EffNet-B3 | 34.9 | 0.344 | 0.264 | 0.181 | 0.134 |

| PersFormer | ResNet-50 | 43.2 | 0.229 | 0.245 | 0.078 | 0.106 |

| LATR | ResNet-50 | 54.0 | 0.171 | 0.201 | 0.072 | 0.099 |

| DV-3DLane(Camera) | ResNet-34 | 52.9 | 0.173 | 0.212 | 0.069 | 0.098 |

| GLane3D-Lite (Ours) | ResNet-18 | 53.8 | 0.182 | 0.206 | 0.070 | 0.095 |

| GLane3D-Base (Ours) | ResNet-50 | 57.9 | 0.157 | 0.179 | 0.067 | 0.087 |

| GLane3D-Large (Ours) | Swin-B | 61.1 | 0.142 | 0.167 | 0.061 | 0.084 |

| Dₜ | Method | F1-Score ↑ | X-error near (m) ↓ | X-error far (m) ↓ | Z-error near (m) ↓ | Z-error far (m) ↓ |
|---|---|---|---|---|---|---|
| 1.5 m | PersFormer | 53.2 | 0.407 | 0.813 | 0.122 | 0.453 |
| 1.5 m | LATR | 34.3 | 0.327 | 0.737 | 0.142 | 0.500 |
| 1.5 m | GLane3D | 58.9 | 0.289 | 0.701 | 0.086 | 0.479 |
| 0.5 m | PersFormer | 17.4 | 0.246 | 0.381 | 0.098 | 0.214 |
| 0.5 m | LATR | 19.0 | 0.201 | 0.313 | 0.116 | 0.220 |
| 0.5 m | GLane3D | 42.6 | 0.162 | 0.296 | 0.063 | 0.198 |

| Subset | Methods | Backbone | F1-Score (%) ↑ | AP (%) ↑ | X-error near (m) ↓ | X-error far (m) ↓ | Z-error near (m) ↓ | Z-error far (m) ↓ |

|---|---|---|---|---|---|---|---|---|

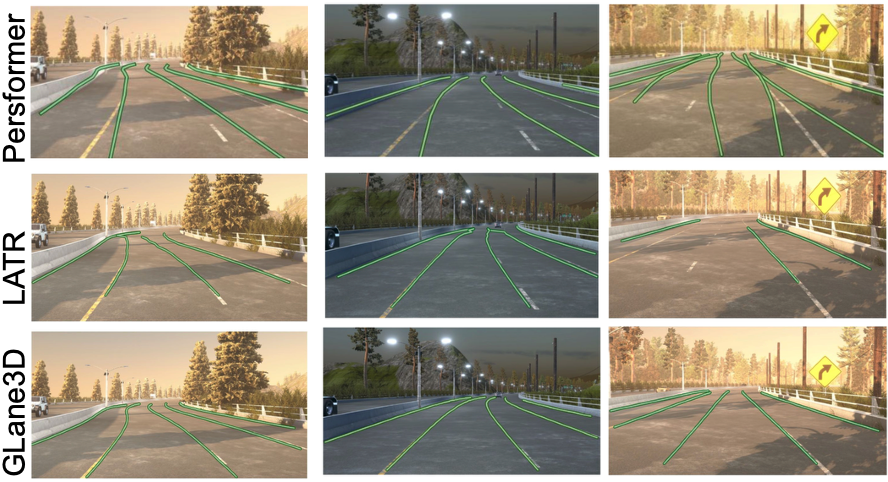

| Balanced Scene | PersFormer | EffNet-B7 | 92.9 | - | 0.054 | 0.356 | 0.010 | 0.234 |

| BEVLaneDet | ResNet-34 | 96.9 | - | 0.016 | 0.242 | 0.020 | 0.216 | |

| LaneCPP | EffNet-B7 | 97.4 | 99.5 | 0.030 | 0.277 | 0.011 | 0.206 | |

| LATR | ResNet-50 | 96.8 | 97.9 | 0.022 | 0.253 | 0.007 | 0.202 | |

| DV-3DLane | ResNet-50 | 96.4 | 97.6 | 0.046 | 0.299 | 0.016 | 0.213 | |

| GLane3D (Ours) | ResNet-50 | 98.1 | 98.8 | 0.021 | 0.250 | 0.007 | 0.213 | |

| Rare Scene | PersFormer | EffNet-B7 | 87.5 | - | 0.107 | 0.782 | 0.024 | 0.602 |

| BEVLaneDet | ResNet-34 | 97.6 | - | 0.031 | 0.594 | 0.040 | 0.556 | |

| LaneCPP | EffNet-B7 | 96.2 | 98.6 | 0.073 | 0.651 | 0.023 | 0.543 | |

| LATR | ResNet-50 | 96.1 | 97.3 | 0.050 | 0.600 | 0.015 | 0.532 | |

| DV-3DLane | ResNet-50 | 95.5 | 97.2 | 0.071 | 0.664 | 0.025 | 0.568 | |

| GLane3D (Ours) | ResNet-50 | 98.4 | 99.1 | 0.044 | 0.621 | 0.023 | 0.566 | |

| Visual Variations | PersFormer | EffNet-B7 | 89.6 | - | 0.074 | 0.430 | 0.015 | 0.266 |

| BEVLaneDet | ResNet-34 | 95.0 | - | 0.027 | 0.320 | 0.031 | 0.256 | |

| LaneCPP | EffNet-B7 | 90.4 | 93.7 | 0.054 | 0.327 | 0.020 | 0.222 | |

| LATR | ResNet-50 | 95.1 | 96.6 | 0.045 | 0.315 | 0.016 | 0.228 | |

| DV-3DLane | ResNet-50 | 91.3 | 93.4 | 0.095 | 0.417 | 0.040 | 0.320 | |

| GLane3D (Ours) | ResNet-50 | 92.7 | 94.8 | 0.046 | 0.364 | 0.020 | 0.317 |

@inproceedings{ozturk2025glane3d,
  title={GLane3D: Detecting Lanes with Graph of 3D Keypoints},
  author={{\"O}zt{\"u}rk, Halil {\.I}brahim and Kalfao{\u{g}}lu, Muhammet Esat and Kilinc, Ozsel},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={27508--27518},
  year={2025}
}